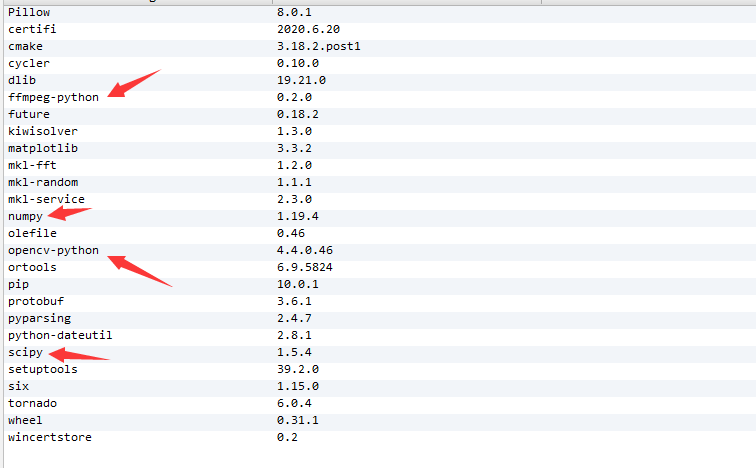

If the video has a size of 420x360 pixels, then the first 420x360x3 bytes (453,600 bytes) output by FFMPEG will give the RGB values of the pixels of the first frame, line by line, top to bottom. The next 420x360x3 bytes after that will represent the second frame, and so on. Now we just have to read the output of FFMPEG. In `sp.Popen`, the `bufsize` parameter must be bigger than the size of one frame. It can be omitted most of the time in Python 2, but not in Python 3, where its default value (`io.DEFAULT_BUFFER_SIZE`, typically 8192 bytes) is small.

Take care to replace PATHTOYOURVIDEOFILE with the path to your video, and check that your ffmpeg binary is located at /usr/bin/ffmpeg (you can do this by running `which ffmpeg` in your shell). For more complex filtering, the ffmpeg-python package (Python bindings for FFmpeg with complex filtering support) offers functions such as `ffmpeg.input()`.
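The frame layout and the `bufsize` rule can be put together in a minimal sketch, assuming Python 3, an ffmpeg binary at /usr/bin/ffmpeg, and a frame size known in advance (420x360 in the text). The helper names `build_command`, `frame_size`, `read_frames`, `pixel` and `iterate_video` are hypothetical, not part of any library. Each frame is returned as raw bytes, and pixel (x, y) sits at offset `(y * width + x) * 3`, which follows from the row-by-row, top-to-bottom layout described above.

```python
import subprocess as sp
from typing import BinaryIO, Iterator, List, Tuple

# Assumed location of the ffmpeg binary; check it with `which ffmpeg`.
FFMPEG_BIN = "/usr/bin/ffmpeg"


def build_command(path: str, ffmpeg_bin: str = FFMPEG_BIN) -> List[str]:
    """Ask FFMPEG for raw RGB (rgb24) frames written to stdout ('-')."""
    return [
        ffmpeg_bin,
        "-i", path,
        "-f", "image2pipe",
        "-pix_fmt", "rgb24",
        "-vcodec", "rawvideo",
        "-",
    ]


def frame_size(width: int, height: int) -> int:
    """Bytes per frame: 3 bytes (R, G, B) for every pixel."""
    return width * height * 3


def read_frames(stream: BinaryIO, width: int, height: int) -> Iterator[bytes]:
    """Yield complete frames; stop at end of stream or on a truncated frame."""
    size = frame_size(width, height)
    while True:
        chunk = stream.read(size)
        if len(chunk) < size:
            return
        yield chunk


def pixel(frame: bytes, width: int, x: int, y: int) -> Tuple[int, int, int]:
    """RGB of pixel (x, y); rows are stored top to bottom, each left to right."""
    offset = (y * width + x) * 3
    return frame[offset], frame[offset + 1], frame[offset + 2]


def iterate_video(path: str, width: int, height: int) -> Iterator[bytes]:
    """Run FFMPEG on `path` and yield its frames one at a time."""
    pipe = sp.Popen(
        build_command(path),
        stdout=sp.PIPE,
        stderr=sp.DEVNULL,  # discard the log so an unread pipe cannot stall FFMPEG
        bufsize=10 * frame_size(width, height),  # bigger than one frame
    )
    try:
        yield from read_frames(pipe.stdout, width, height)
    finally:
        pipe.stdout.close()
        pipe.wait()
```

For example, `for frame in iterate_video("PATHTOYOURVIDEOFILE", 420, 360):` followed by `pixel(frame, 420, 0, 0)` would read the top-left pixel of every frame. Sending stderr to `DEVNULL` is a design choice of this sketch: a stderr pipe that nobody reads can fill up and block FFMPEG.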

The reading code starts with `import subprocess as sp`, then builds a `command` and launches it with `pipe = sp.Popen(...)`. In that command, `-i myHolidays.mp4` indicates the input file, while `rawvideo`/`rgb24` asks for raw RGB output. The format `image2pipe` and the `-` at the end tell FFMPEG that it is being used with a pipe by another program.

`.openstream(showinfo, loglevel, hidebanner, silenceeventest)`: when `showinfo` is True, it invokes ffmpeg's `showinfo` filter, providing details about each frame as it is read.

When working with multistream audio files, `-map` selects individual streams. For example, in `ffmpeg -i stream1.m4a -i stream2.m4a -map 0:1 -map 0:2 out.m4a`, the two `-map` options pick streams 1 and 2 of the first input (stream1.m4a) for out.m4a.

A short script is also enough to pull the duration of a video from any machine with FFMPEG and Python installed.
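A sketch of such a duration script, assuming Python 3.7+ and that FFMPEG reports the length on a `Duration: HH:MM:SS.ss` line, which it prints to stderr when run with only an input file (it then exits with an error because no output is given, but the input information is already printed). The names `parse_duration` and `video_duration` are hypothetical helpers. The result is `None` when no duration is reported, as with `Duration: N/A` on some live streams.

```python
import re
import subprocess as sp
from typing import Optional

# Assumed location of the ffmpeg binary; check it with `which ffmpeg`.
FFMPEG_BIN = "/usr/bin/ffmpeg"

# Matches e.g. "  Duration: 00:01:23.45, start: 0.000000, bitrate: ..."
DURATION_RE = re.compile(r"Duration:\s*(\d+):(\d{2}):(\d{2}(?:\.\d+)?)")


def parse_duration(ffmpeg_log: str) -> Optional[float]:
    """Return the duration in seconds found in FFMPEG's log text, or None."""
    match = DURATION_RE.search(ffmpeg_log)
    if match is None:
        return None
    hours, minutes, seconds = match.groups()
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def video_duration(path: str, ffmpeg_bin: str = FFMPEG_BIN) -> Optional[float]:
    """Run FFMPEG with only an input file and parse its stderr."""
    result = sp.run(
        [ffmpeg_bin, "-hide_banner", "-i", path],
        stdout=sp.DEVNULL,
        stderr=sp.PIPE,
        text=True,  # decode stderr to str
    )
    # The non-zero exit code ("no output file") is expected and ignored here.
    return parse_duration(result.stderr)
```

Usage would be `video_duration("myHolidays.mp4")`, which returns seconds as a float. Parsing the human-readable log is fragile across FFMPEG versions; it is used here only because the text relies on FFMPEG alone.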
